Projekt Hazelcast jako podejście In-Memory Data Grid

Projekt Hazelcast jako podejście In-Memory Data Grid

Podejście In-Memory Data Grid to podejście w którym dane przekształcone są w obiekty które z kolei ładowane są do pamięci operacyjnej komputera. Rozwiązanie to zwiększa znacznie szybkość dostępu do danych, kosztem jednak jest spójność danych które mogą zostać rozsynchronizowane co nie ma miejsca w przypadku relacyjnych baz danych. Przykładem tego podejścia jest projekt Open Source – Hazelcast zaimplementowany wzorcowo w Javie, przystosowany do pracy w środowisku rozproszonym. Testy pokazują, że Hazelcast daje 56% wzrost szybkości w dostępie do danych oraz 44 % wzrost w zapisie danych. Tworzymy projekt!

spring-cache-hazelcast – niezbędne zależności:

<dependencies> <dependency> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-starter-jdbc</artifactId> </dependency> <dependency> <groupId>com.h2database</groupId> <artifactId>h2</artifactId> <scope>runtime</scope> </dependency> <dependency> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-starter-web</artifactId> </dependency> <dependency> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-starter-cache</artifactId> </dependency> <dependency> <groupId>com.hazelcast</groupId> <artifactId>hazelcast</artifactId> </dependency> <dependency> <groupId>com.hazelcast</groupId> <artifactId>hazelcast-spring</artifactId> </dependency> <dependency> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-starter-test</artifactId> <scope>test</scope> </dependency> </dependencies>

W projekcie dodana została zależność do bazy H2, oraz do startera – starter-jdbc. Należy dodać plik schema-hsqldb.sql który zawiera definicje przykładowej tabeli która inicjowana będzie przy starcie aplikacji. Ponadto należy uzupełnić plik application.properties:

Plik ./resources/schema-hsqldb.sql zawiera definicje przykładowej tabeli Employee:

DROP TABLE IF EXISTS employee; CREATE TABLE employee ( empId VARCHAR(10) NOT NULL, empName VARCHAR(100) NOT NULL );

Plik application.properties:

spring.datasource.url = jdbc:h2:file:./Database;DB_CLOSE_ON_EXIT=FALSE;AUTO_SERVER=TRUE spring.datasource.platform = hsqldb spring.h2.console.enabled = true spring.datasource.username = sa spring.datasource.password = server.port = 0

wpis:

server.port = 0

oznacza, że aplikacja zostanie uruchomiona na losowym porcie, wpis ten jest ważny ze względu na konflikt portów.

wpis:

spring.datasource.platform = hsqldb

oznacza, że zostanie uruchomiony zdefiniowany wcześniej plik SQL dla bazy H2.

wpis:

spring.h2.console.enabled = true

oznacza, że pod adresem:

localhost:8080/h2-console

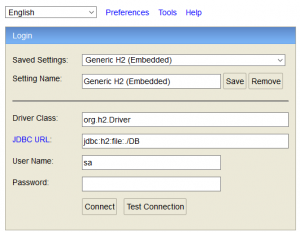

będziemy mieli graficzny dostęp do konsoli bazy H2:

Klasa konfiguracyjna:

@Configuration public class HazelcastConfiguration { @Bean public Config hazelCastConfig(){ return new Config() .setInstanceName("hazelcast-instance") .addMapConfig( new MapConfig() .setName("employees") .setMaxSizeConfig(new MaxSizeConfig(200, MaxSizeConfig.MaxSizePolicy.FREE_HEAP_SIZE)) .setEvictionPolicy(EvictionPolicy.LRU) .setTimeToLiveSeconds(20)); } }

Klasa modelu:

public class Employee implements Serializable { private String empId; private String empName; // getters & setters @Override public String toString() { return "Employee [empId=" + empId + ", empName=" + empName + "]"; } }

Interfejs przedstawiający operacje dokonywane na klasie Employee:

public interface EmployeeDao { void insertEmployee(Employee cus); void insertEmployees(List<Employee> employees); List<Employee> getAllEmployees(); Employee getEmployeeById(String empId); }

Implementacja powyższego interfejsu:

@Repository public class EmployeeDaoImpl extends JdbcDaoSupport implements EmployeeDao { @Autowired DataSource dataSource; @PostConstruct private void initialize(){ setDataSource(dataSource); } @Override public void insertEmployee(Employee emp) { String sql = "INSERT INTO employee " + "(empId, empName) VALUES (?, ?)" ; getJdbcTemplate().update(sql, new Object[]{ emp.getEmpId(), emp.getEmpName() }); } @Override public void insertEmployees(List<Employee> employees) { String sql = "INSERT INTO employee " + "(empId, empName) VALUES (?, ?)"; getJdbcTemplate().batchUpdate(sql, new BatchPreparedStatementSetter(){ public void setValues(PreparedStatement ps, int i) throws SQLException { Employee employee = employees.get(i); ps.setString(1, employee.getEmpId()); ps.setString(2, employee.getEmpName()); } public int getBatchSize() { return employees.size(); } }); } @Override public List<Employee> getAllEmployees(){ String sql = "SELECT * FROM employee"; List<Map<String, Object>> rows = getJdbcTemplate().queryForList(sql); List<Employee> result = new ArrayList<Employee>(); for(Map<String, Object> row:rows){ Employee emp = new Employee(); emp.setEmpId((String)row.get("empId")); emp.setEmpName((String)row.get("empName")); result.add(emp); } return result; } @Override public Employee getEmployeeById(String empId) { String sql = "SELECT * FROM employee WHERE empId = ?"; return (Employee)getJdbcTemplate().queryForObject(sql, new Object[] {empId}, new RowMapper<Employee>(){ @Override public Employee mapRow(ResultSet rs, int rwNumber) throws SQLException { Employee emp = new Employee(); emp.setEmpId(rs.getString("empId")); emp.setEmpName(rs.getString("empName")); return emp; } }); } }

Serwis dla operacji DAO:

public interface EmployeeService { void insertEmployee(Employee emp); void insertEmployees(List<Employee> employees); List<Employee> getAllEmployees(); void getEmployeeById(String empid); void clearCache(); }

@Repository @CacheConfig(cacheNames = "employees") public class EmployeeServiceImpl implements EmployeeService { @Autowired EmployeeDao employeeDao; @Override public void insertEmployee(Employee employee) { employeeDao.insertEmployee(employee); } @Override public void insertEmployees(List<Employee> employees) { employeeDao.insertEmployees(employees); } @Override @Cacheable(value = "getAllEmployeesCache") // clear cache before get all employees @CachePut(value="getAllEmployeesCache") public List<Employee> getAllEmployees() { System.out.println("Inside the service layer"); return employeeDao.getAllEmployees(); } @CacheEvict(value="getAllEmployeesCache", allEntries=true) public void clearCache(){} @Override public void getEmployeeById(String empId) { Employee employee = employeeDao.getEmployeeById(empId); System.out.println(employee); } }

Czas na testy!

class HelperMeasureTime { public static long getNanoTime() { return nanoTime(); } } @SpringBootApplication @EnableCaching public class SpringCacheHazelcastApplication { @Autowired EmployeeService employeeService; private static final Logger logger = LoggerFactory.getLogger(SpringCacheHazelcastApplication.class); public static void main(String[] args) { String logFormat = "%s call took %d millis with result: %s"; ApplicationContext context = SpringApplication.run( SpringCacheHazelcastApplication.class, args); EmployeeService employeeService = context.getBean(EmployeeService.class); employeeService.clearCache(); Employee emp= new Employee(); emp.setEmpId("emp"); emp.setEmpName("emp"); Employee emp1= new Employee(); emp1.setEmpId("emp1"); emp1.setEmpName("emp1"); Employee emp2= new Employee(); emp2.setEmpId("emp2"); emp2.setEmpName("emp2"); employeeService.insertEmployee(emp); List<Employee> employees = new ArrayList<>(); employees.add(emp1); employees.add(emp2); employeeService.insertEmployees(employees); // ************************************************ long start_time_1 = HelperMeasureTime.getNanoTime(); System.out.println("call to service first time"); List<Employee> employeeList1 = employeeService.getAllEmployees(); for (Employee employee : employeeList1) { System.out.println(employee.toString()); } long end_time_1 = HelperMeasureTime.getNanoTime(); long duration = end_time_1 - start_time_1; logger.info(format(logFormat, "BEFORE CACHING", TimeUnit.NANOSECONDS.toMillis(duration), employeeList1)); // ************************************************ long start_time_2 = HelperMeasureTime.getNanoTime(); System.out.println("call service with hazelcast"); List<Employee> employeeList2 = employeeService.getAllEmployees(); for (Employee employee : employeeList2) { System.out.println(employee.toString()); } long end_time_2 = HelperMeasureTime.getNanoTime(); long duration2 = end_time_2 - start_time_2; logger.info(format(logFormat, "AFTER CACHING", TimeUnit.NANOSECONDS.toMillis(duration2), employeeList2)); // ************************************************ // employeeService.clearCache(); long start_time_3 = HelperMeasureTime.getNanoTime(); Employee employeeTewmp = new Employee(); employeeTewmp.setEmpId("new-emp"); employeeTewmp.setEmpName("new-employee"); employeeService.insertEmployee(employeeTewmp); System.out.println("call service with hazelcast"); List<Employee> employeeList3 = employeeService.getAllEmployees(); for (Employee employee : employeeList3) { System.out.println(employee.toString()); } long end_time_3 = HelperMeasureTime.getNanoTime(); long duration3 = end_time_3 - start_time_3; logger.info(format(logFormat, "AFTER CACHING", TimeUnit.NANOSECONDS.toMillis(duration3), employeeList3)); // ************************************************ // * fill & read map // ************************************************ HazelcastInstance hzInstance = Hazelcast.newHazelcastInstance(); Map<Long, String> map = hzInstance.getMap("data"); map.clear(); IdGenerator idGenerator = hzInstance.getIdGenerator("newid"); for (int i = 0; i < 10; i++) { map.put(idGenerator.newId(), "message" + 1); } long start_time_4 = HelperMeasureTime.getNanoTime(); System.out.println(map.keySet() + " " + map.values()); long end_time_4 = HelperMeasureTime.getNanoTime(); long duration4 = end_time_4 - start_time_4; logger.info(format(logFormat, "BEFORE CACHING", TimeUnit.NANOSECONDS.toMillis(duration4), map.toString())); long start_time_5 = HelperMeasureTime.getNanoTime(); System.out.println(map.keySet() + " " + map.values()); long end_time_5 = HelperMeasureTime.getNanoTime(); long duration5 = end_time_5 - start_time_5; logger.info(format(logFormat, "AFTER CACHING", TimeUnit.NANOSECONDS.toMillis(duration5), map.toString())); map.put(idGenerator.newId(), "new data to map first"); map.put(idGenerator.newId(), "new data to map second"); long start_time_6 = HelperMeasureTime.getNanoTime(); System.out.println(map.keySet() + " " + map.values()); long end_time_6 = HelperMeasureTime.getNanoTime(); long duration6 = end_time_6 - start_time_6; logger.info(format(logFormat, "AFTER CACHING", TimeUnit.NANOSECONDS.toMillis(duration6), map.toString())); } }

Wyniki testów są następujące:

2019-07-09 09:47:37.299 INFO 51308 --- [main] p.j.s.SpringCacheHazelcastApplication : BEFORE CACHING call took 31 millis with result:

2019-07-09 09:47:37.317 INFO 51308 --- [main] p.j.s.SpringCacheHazelcastApplication : AFTER CACHING call took 18 millis with result:

2019-07-09 09:47:37.333 INFO 51308 --- [main] p.j.s.SpringCacheHazelcastApplication : AFTER CACHING call took 14 millis with result:

Wzrost jest na poziomie 54%.

2019-07-09 09:35:30.744 INFO 41000 --- [main] p.j.s.SpringCacheHazelcastApplication:BEFORE CACHING call took 71 millis with result: IMap{name='data'}

2019-07-09 09:35:30.778 INFO 41000 --- [main] p.j.s.SpringCacheHazelcastApplication: AFTER CACHING call took 33 millis with result: IMap{name='data'}

2019-07-09 09:35:30.808 INFO 41000 --- [main] p.j.s.SpringCacheHazelcastApplication: AFTER CACHING call took 13 millis with result: IMap{name='data'}

Wzrost jest na poziomie 80%.

Leave a comment